IMA/ITP Documentation | Fall 2024


`sudo passwd -l root`

`logout`

At that point, I logged back in as the new user:

`ssh YOUR_USERNAME@YOUR_IP_ADDRESS`

To upgrade the Linux OS of this fresh install, I used the apt tool (Advanced Package Tool). This is best practice on a new or seldom-used Linux OS.

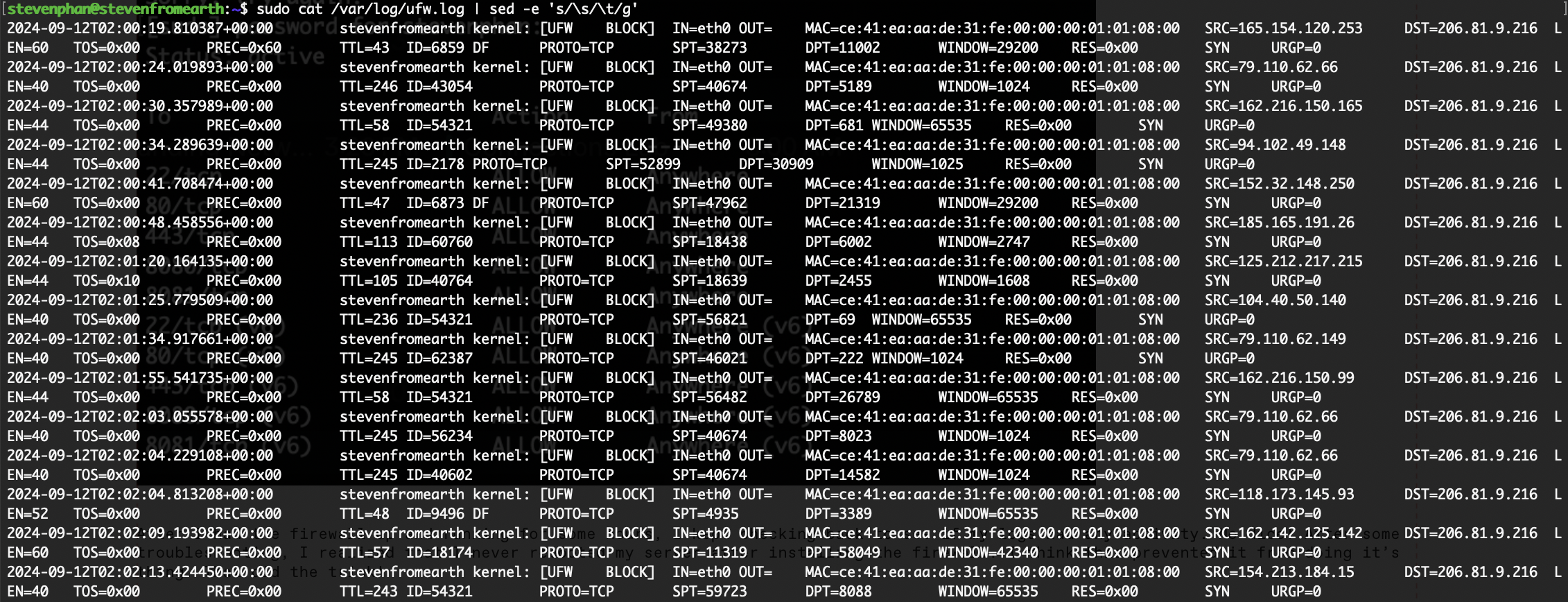

`sudo apt upgrade`

A firewall is critical for protecting any web server, so I opted for UFW (Uncomplicated Firewall):

`sudo apt install ufw`

Next, I installed some network tools:

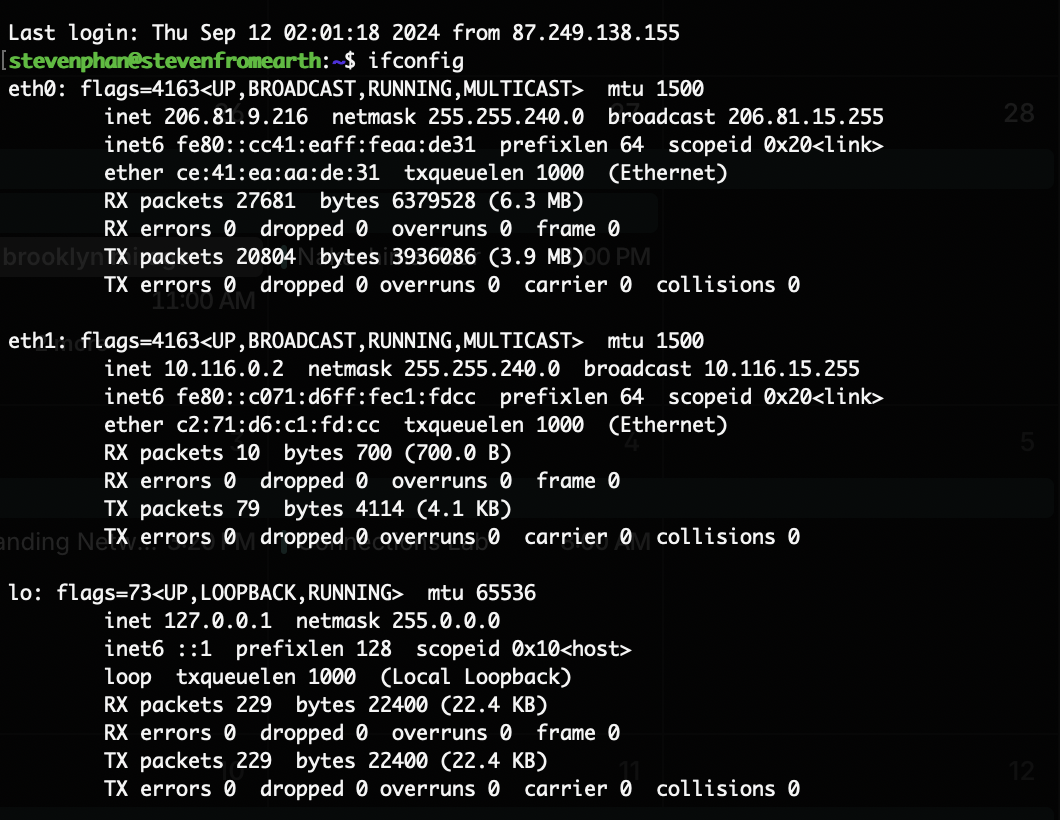

`sudo apt install net-tools`

The net-tools package includes ifconfig, a useful tool for a setup like this because it shows the status of each network interface on the server.
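The ifconfig output can be long when only the addresses are needed. The sketch below pulls out each interface name with its IPv4 address. It assumes bash and the net-tools 2.x output layout, where the interface name starts a line (`eth0: flags=...`) and the IPv4 address sits on an indented line beginning with `inet`. The function name `ipv4_by_interface` is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print "<interface> <IPv4 address>" for each interface in ifconfig output read on stdin.
# Assumes the net-tools 2.x layout: the interface name starts a line ("eth0: flags=..."),
# and its IPv4 address appears on an indented line whose first word is "inet".
ipv4_by_interface() {
  awk '
    /^[^ \t]/ { iface = $1; sub(/:$/, "", iface) }   # header line: remember the interface name
    $1 == "inet" { print iface, $2 }                  # indented IPv4 line for that interface
  '
}

# Usage on the server:
#   ifconfig | ipv4_by_interface
```

Lines starting with `inet6` are skipped because their first field is not exactly `inet`.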

I also installed Node.js:

`sudo apt install nodejs`

From here, I moved on to configuring the firewall.

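First, I set the default policies to allow all outgoing traffic and deny all incoming traffic:

`$ sudo ufw default allow outgoing`

`$ sudo ufw default deny incoming`

Next, I allowed TCP connections on port 22, the default SSH port.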

`$ sudo ufw allow ssh`

Because I plan to use this as a web server, I allowed HTTP (port 80) and HTTPS (port 443). For each rule I also specified the transport protocol, TCP, so only TCP traffic is accepted on those ports.

`$ sudo ufw allow http/tcp`

`$ sudo ufw allow https/tcp`

I also opened ports 8080 and 8081, which are common choices for Node.js development servers, so I can run custom servers. These rules are also limited to TCP. As a result, UDP traffic to these ports stays blocked by the default deny-incoming policy, which narrows my exposure to attacks such as UDP floods aimed at open ports.

`$ sudo ufw allow 8080/tcp`

`$ sudo ufw allow 8081/tcp`

Finally, I fired up the firewall.

`$ sudo ufw enable`

I was able to check the status of the firewall with the following command:

`$ sudo ufw status`

All seemed good!
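For reference, the firewall steps above can be collected into one script that preserves their order. Order matters here: the ssh rule is added before `ufw enable`, so enabling the firewall does not cut off the SSH session being used to configure it. This is a minimal sketch that assumes bash. The `run` helper and the `DRY_RUN` variable are hypothetical. By default the script only prints the commands, and `DRY_RUN=0` executes them.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the command when DRY_RUN is 1 (the default), run it when DRY_RUN=0.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# The UFW rules from this setup, in the same order as above.
configure_ufw() {
  run sudo ufw default allow outgoing
  run sudo ufw default deny incoming
  run sudo ufw allow ssh          # port 22/tcp, added before enabling
  run sudo ufw allow http/tcp     # port 80
  run sudo ufw allow https/tcp    # port 443
  run sudo ufw allow 8080/tcp     # Node.js development server
  run sudo ufw allow 8081/tcp     # second development port
  run sudo ufw enable             # asks for confirmation, since it may disrupt SSH sessions
  run sudo ufw status
}

# Usage: review the printed plan first, then apply it.
#   configure_ufw
#   DRY_RUN=0 configure_ufw
```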

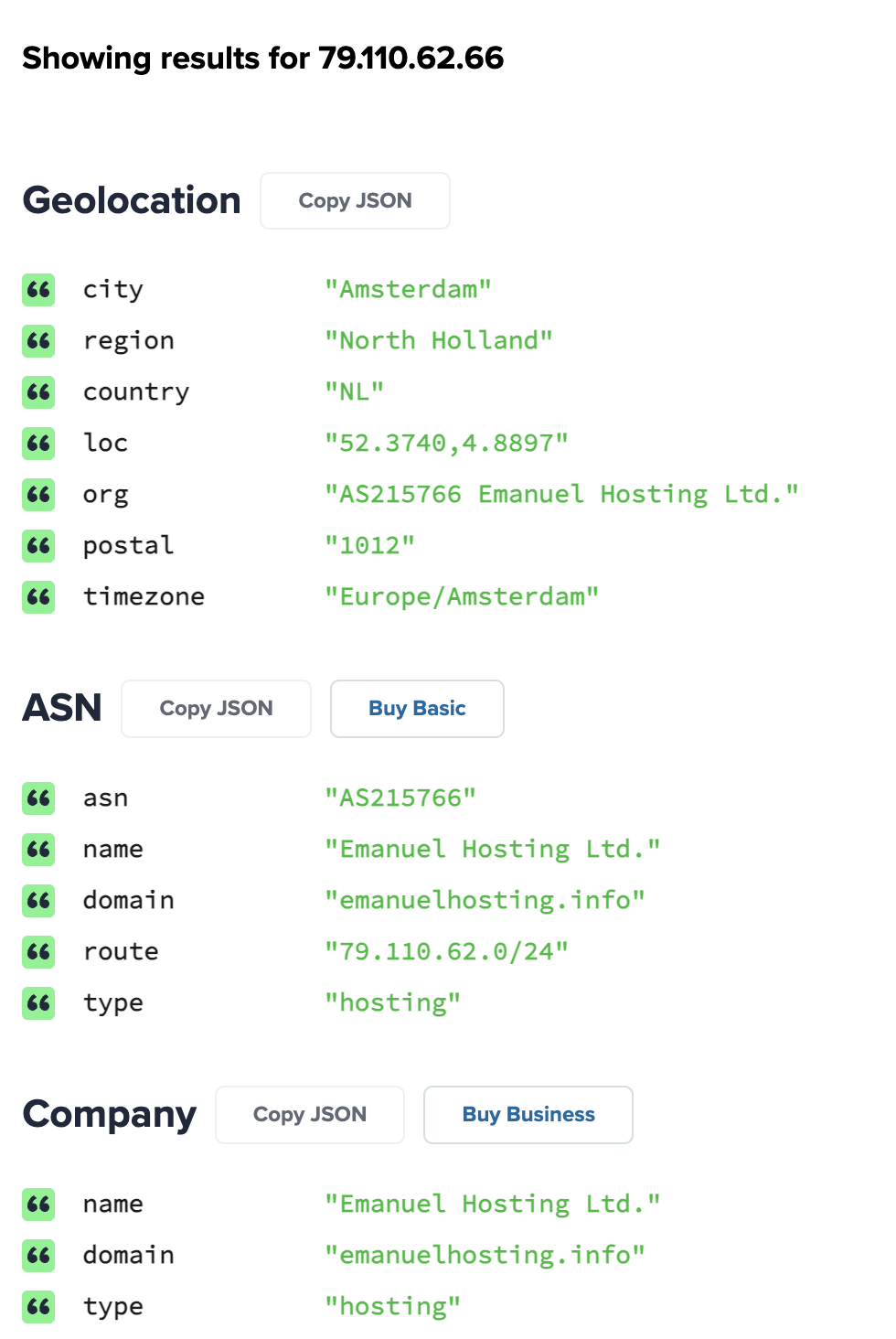

inetnum: 79.110.62.0 - 79.110.62.255
netname: ColinBrown
org: ORG-EL451-RIPE
country: GB
admin-c: SH16229-RIPE
tech-c: SH16229-RIPE
mnt-routes: EmanuelHostingLTD-mnt
mnt-domains: EmanuelHostingLTD-mnt
status: ASSIGNED PA
mnt-by: MNT-NETIX
mnt-by: MNT-NETERRA
created: 2024-04-19T07:15:15Z
last-modified: 2024-04-19T07:15:15Z
source: RIPE

organisation: ORG-EL451-RIPE
org-name: Emanuel Hosting Ltd.
country: GB
org-type: OTHER
address: 26 New Kent Road, SE1 6TJ London, England
abuse-c: ACRO54984-RIPE
mnt-ref: MNT-NETERRA
mnt-by: EmanuelHostingLTD-mnt
created: 2023-12-14T15:27:18Z
last-modified: 2024-08-09T17:20:39Z
source: RIPE # Filtere
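A whois record like the one above is a series of `key: value` lines, and some keys repeat (`mnt-by`, for example). The sketch below prints every value for one key. It assumes bash and this RIPE-style layout, and it matches whole keys only, so `org` does not also match `org-name`. The function name `whois_field` is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print every value recorded for KEY in a RIPE-style whois record read on stdin.
# A line matches only if it starts with exactly "KEY:", so "org" does not match "org-name".
whois_field() {
  local key="$1"
  awk -v key="$key" '
    index($0, key ":") == 1 {
      value = substr($0, length(key) + 2)   # drop the "key:" prefix
      sub(/^[ \t]+/, "", value)             # trim the padding before the value
      print value
    }
  '
}

# Usage (requires the whois client):
#   whois 79.110.62.0 | whois_field netname
```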

Nginx is “a high-performance, open-source web server and reverse proxy server that is also commonly used as a load balancer, HTTP cache, and mail proxy. It was originally designed as a web server to handle high concurrency, but over time it has evolved into a powerful multipurpose server.” It’s awesome, simple to use and free.

HTTPS (Hypertext Transfer Protocol Secure) is a secure version of HTTP, which encrypts data transferred between a user’s browser and a website using SSL (Secure Sockets Layer) or its successor TLS (Transport Layer Security). SSL/TLS ensures that sensitive information like passwords and credit card numbers is encrypted, providing authentication and data integrity to protect against eavesdropping and tampering.

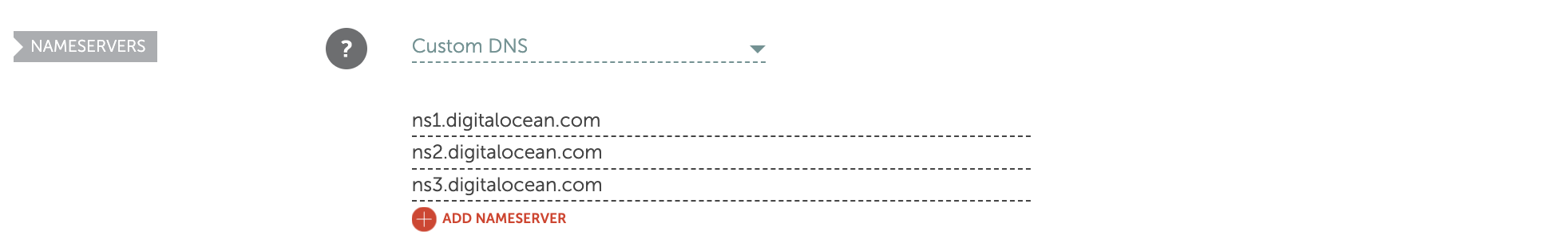

1. Redundancy and Reliability:

Having multiple nameservers ensures that if one server is down or unavailable, the others can still respond to DNS queries. This improves the reliability of your DNS resolution. By configuring multiple nameservers, you reduce the chances of DNS failure affecting your website’s availability.

2. Load Balancing:

With multiple nameservers, DNS queries can be distributed across the different servers, preventing any single server from becoming overwhelmed with traffic. This helps balance the load and ensure that queries are processed quickly.

3. Geographical Distribution:

Nameservers are often distributed across different geographic regions. By using all three nameservers (ns1, ns2, and ns3), you help DNS queries resolve quickly and reliably for users around the world. Resolvers tend to favor whichever nameserver responds fastest, which is often the nearest one.

4. Failover Protection:

If a nameserver becomes unavailable due to maintenance or an outage, the other nameservers can still resolve DNS queries. This failover protection helps maintain the accessibility of your domain even in the event of server issues.
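DNS resolvers perform this failover on their own, so none of it has to be scripted. The sketch below only illustrates the idea from the list above: ask each nameserver in turn and stop at the first one that answers. It assumes bash and, by default, the `dig` client. The function names, the `QUERY` hook (which lets a stand-in replace `dig` for offline testing), and the nameserver and domain names in the usage line are all hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Default query: ask nameserver $1 for the A records of name $2, with a short timeout and one try.
dig_query() {
  dig +short +time=2 +tries=1 "@$1" "$2" A
}

# Try each nameserver in order and print the first non-empty answer.
# QUERY (hypothetical) names the function used to query; it defaults to dig_query.
resolve_with_failover() {
  local name="$1"; shift
  local ns answer
  for ns in "$@"; do
    if answer="$("${QUERY:-dig_query}" "$ns" "$name")" && [[ -n "$answer" ]]; then
      echo "$ns: $answer"
      return 0
    fi
    echo "$ns: no answer, trying next" >&2
  done
  return 1   # every nameserver failed
}

# Usage (placeholder names):
#   resolve_with_failover example.com ns1.example.net ns2.example.net ns3.example.net
```

An empty answer is treated like a failure, because `dig +short` can exit successfully without printing any records.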